Researchers at Georgia Tech have taken a critical step forward in creating efficient, useful and brain-like artificial intelligence (AI). The key? A new algorithm that produces neural networks whose internal structure more closely resembles that of the human brain.

The study, “TopoNets: High-Performing Vision and Language Models With Brain-Like Topography,” was awarded a spotlight at this year’s International Conference on Learning Representations (ICLR), a distinction given to only 2 percent of papers. The research was led by graduate student Mayukh Deb alongside School of Psychology Assistant Professor Apurva Ratan Murty.

Thirty-two Georgia Tech faculty members from computing, engineering, and science represented the Institute at ICLR 2025, a conference globally renowned for sharing cutting-edge research.

“We started with this idea because we saw that AI models are unstructured, while brains are exquisitely organized,” says first author Deb. “Our models with internal structure showed more than a 20 percent boost in efficiency with almost no performance losses. And this is out-of-the-box — it’s broadly applicable to other models with no extra fine-tuning needed.”

For Murty, the research also underscores the importance of a rapidly growing field of research at the intersection of neuroscience and AI. “There's a major explosion in understanding intelligence right now,” he says. “The neuro-AI approach is exciting because it helps emulate human intelligence in machines, making AI more interpretable.”

“In addition to advancing AI, this type of research also benefits neuroscience because it informs a fundamental question: Why is our brain organized the way it is?” Deb adds. “Making AI more interpretable helps everyone.”

Brain-inspired blueprints

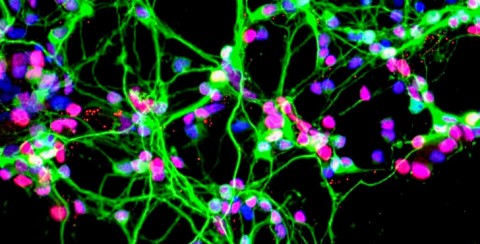

In the brain, neurons form topographic maps: neurons involved in similar tasks sit closer together. The researchers applied this concept to AI by organizing how internal components (such as artificial neurons) connect and process information.

Researchers have tried to build this kind of organization into AI before, but it has proved challenging, Murty says. “Historically, rules constraining how the AI could structure itself often resulted in lower-performing models. We realized that for this type of biophysical constraint, you simply can’t map everything — you need an algorithmic solution.”

“Our key insight was an algorithmic trick that gives the same structure as brains without enforcing things that models don't respond well to,” he adds. “That breakthrough was what Mayukh (Deb) worked on.”

The algorithm, called TopoLoss, adds a term to a model’s loss function (the quantity a network tries to minimize during training) that encourages brain-like organization in artificial neural networks. It is compatible with many AI systems that process language and images.
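To make the idea of a loss term that rewards topographic organization more concrete, here is a minimal sketch in Python. It is not the authors’ TopoLoss: it swaps in a simple neighbour-smoothness penalty, assuming artificial neurons are laid out on a hypothetical 2D grid (a “cortical sheet”) and that each neuron is described by a weight vector. The names `topographic_penalty`, `combined_loss` and the `weight` parameter are illustrative assumptions. The sketch only computes the scalar value; actual training would need a framework with automatic differentiation.

```python
import math
from typing import List

Vector = List[float]
Sheet = List[List[Vector]]  # hypothetical 2D grid of neurons, each with a weight vector


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two weight vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    return dot / (na * nb)


def topographic_penalty(sheet: Sheet) -> float:
    """Mean (1 - cosine similarity) between each neuron and its right and lower neighbours.

    Low values mean nearby neurons have similar weights, i.e. a smooth,
    map-like layout. This is an illustrative stand-in, not TopoLoss itself.
    """
    rows, cols = len(sheet), len(sheet[0])
    total, pairs = 0.0, 0
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((0, 1), (1, 0)):  # right neighbour, lower neighbour
                rr, cc = r + dr, c + dc
                if rr < rows and cc < cols:
                    total += 1.0 - cosine(sheet[r][c], sheet[rr][cc])
                    pairs += 1
    return total / pairs if pairs else 0.0


def combined_loss(task_loss: float, sheet: Sheet, weight: float = 0.1) -> float:
    """Task loss plus a weighted topographic penalty (weight is a hypothetical knob)."""
    return task_loss + weight * topographic_penalty(sheet)


if __name__ == "__main__":
    smooth = [[[1.0, 0.0], [1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]]]
    checker = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
    print(topographic_penalty(smooth))   # neighbours identical -> 0.0
    print(topographic_penalty(checker))  # neighbours orthogonal -> 1.0
    print(combined_loss(2.0, checker))
```

In this toy setup, a grid where neighbouring neurons share the same weights adds nothing to the loss, while a checkerboard of unrelated neighbours is penalized. A training procedure would therefore nudge the network toward smoother, map-like layouts while the task loss keeps it accurate; the `weight` parameter trades one goal against the other.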

“The resulting training method, TopoNets, is very flexible and broadly applicable,” Murty says. “You can apply it to contemporary models very easily, which is a critical advancement when compared to previous methods.”

Neuro-AI innovations

Murty and Deb plan to continue refining and designing brain-inspired AI systems. “All parts of the brain have some organization — we want to expand into other domains,” Deb says. “On the neuroscience side of things, we want to discover new kinds of organization in brains using these topographic systems.”

Deb also cites possibilities in robotics, especially in situations like space exploration where resources are limited. “Imagine running a model inside a robot with limited power,” he says. “Structured models can help us achieve 80 percent of performance with just 20 percent of energy consumption, saving valuable energy and space. This is still experimental, but it's the direction we are interested in exploring.”

“This success highlights the potential of a new approach, designing systems that benefit both neuroscience and AI — and beyond,” Murty adds. “We can learn so much from the human brain, and this project shows that brain-inspired systems can help current AI be better. We hope our work stimulates this conversation.”

Written by Selena Langner

Contact: Jess Hunt-Ralston