For many stroke survivors with impaired upper-limb motor function, once-simple tasks like reaching for a pen or grabbing a glass of water feel impossible. But the power of imagination and intention may help: If you can think it, eventually you can grab it.

Mental practice, such as motor imagery and action observation, is an effective intervention for restoring upper-limb function. The challenge is that the performance quality and efficacy of mental practice can vary significantly between individuals.

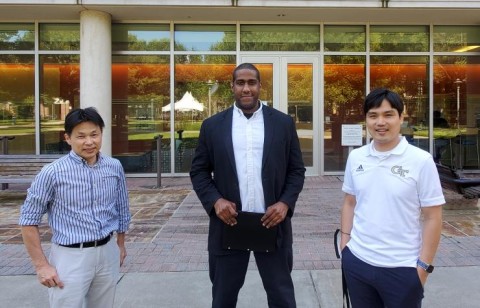

A team of researchers at the Georgia Institute of Technology, led by principal investigator Minoru “Shino” Shinohara, is addressing that challenge by developing what Shinohara calls “a new paradigm of motor imagery,” which integrates proven methods of neuromotor facilitation with robotic prostheses. The researchers want to help stroke survivors turn thoughts and intentions into useful actions.

Shinohara, associate professor in the School of Biological Sciences, is collaborating with co-investigators Frank Hammond, assistant professor of mechanical engineering and biomedical engineering, and Woo-Hong Yeo, associate professor of mechanical engineering. The National Institutes of Health (NIH) is supporting their work with a $275,000, 18-month R21 grant for the project “Robotically Augmented Mental Practice for Neuromotor Facilitation.”

“The idea here is that people who have had a stroke often have a kind of contracted posture, like this,” said Shinohara, who illustrated his point by contorting his upper body accordingly.

“And when they try to reach for something, or to extend the reach of their arm, they use their trunk, because they don’t have good, fluid arm motion,” Shinohara added, hiking up his right shoulder and thrusting it forward, as an example.

This kind of intentional synergistic movement is common in people with motor impairment caused by stroke or other neurological conditions. Because they lack the fine motor control to activate their arms or hands, this broad movement is often the best they can do, particularly in the early stages of recovery. The movement is a sign that the brain can’t correctly send signals to the affected muscles. But it is also a sign that the brain is trying to relearn how, and that’s what Shinohara and his team want to build upon.

“It’s possible that this motion can increase neural excitability of the hand muscles for opening – that it is related to a more coordinated motion, like grabbing the glass,” Shinohara said. “We want to utilize this trunk motion for actually opening and closing a robotic prosthesis.”

The researchers hypothesize that controlling and observing a robotic hand’s grasp and release actions through this shoulder and trunk motion (synergistic proximal muscle activation) will increase the excitability of the hand muscles, because the user is cognitively engaged with a robotic prosthesis that is physically present and visible. In other words, the individual thinks about grabbing an object and makes the corresponding shoulder and trunk motion, which activates the robotic hand.

Shinohara believes this robotically augmented mental practice can help the brain efficiently relearn how to produce and send the right signals at the right time to the affected hand muscles.

“You may not be able to use your own hands, but you’ll see the corresponding action of the prosthetic reacting, as if it’s your grip and you are opening and closing,” he said. “That’s action observation. So, if you see the robot and you’re engaged in controlling the action, we expect to see an increase in the ability of the brain to control the hand. That’s the basic idea.”

To test the idea, Shinohara, director of the Human Neuromuscular Physiology Lab and a member of both the Petit Institute for Bioengineering and Bioscience and the Institute for Robotics and Intelligent Machines (all at Georgia Tech), is partnering with the labs of Yeo and Hammond.

Yeo has developed cutting-edge motor imagery-based brain-machine interface (BMI) systems: rehabilitation technology that uses flexible scalp electronics and deep-learning algorithms to analyze a person’s brain signals and translate that neural activity into commands.

A member of several Georgia Tech research institutes, Yeo is principal investigator of the Bio-Interfaced Translational Nanoengineering Group and director of the Center for Human-Centric Interfaces and Engineering. For this project, he is developing an algorithm for detecting trunk motion.

Hammond is principal investigator of the Adaptive Robotic Manipulation (ARM) Laboratory, where his research focuses on a variety of robotics topics, including human augmentation devices enabled by sensory feedback.

For this project, Hammond’s lab is developing a robotic arm that could restore some neuromotor function to patients in the future, he said, “and provide greater degrees of motor imagery. The data we generate will be helpful in creating a robotic device that will be a lot more effective in treatment and maybe more versatile, allowing us to accommodate a broader population of patients undergoing rehabilitation.”

The NIH’s R21 grants are intended to encourage developmental or exploratory research at the early stages of project development, with the hope that the work can lead to further advances in the research. Shinohara believes he and his collaborators are moving in that direction.

“Development of this new paradigm and its integration with able-bodied and post-stroke disabled individuals will open new scientific and clinical concepts and studies on augmented motor imagery,” Shinohara said. “And that can lead to effective treatment strategies for people with neuromotor impairment.”

Writer: Jerry Grillo