A leap forward in artificial intelligence control from Georgia Tech engineers could one day make robotic assistance for everyday activities as easy as putting on a pair of pants.

Researchers have developed a task-agnostic controller for robotic exoskeletons that’s capable of assisting users with all kinds of leg movements, including ones the AI has never seen before.

It’s the first controller able to support dozens of realistic human lower limb movements, including dynamic actions like lunging and jumping, as well as more typical unstructured movements like starting and stopping, twisting, and meandering.

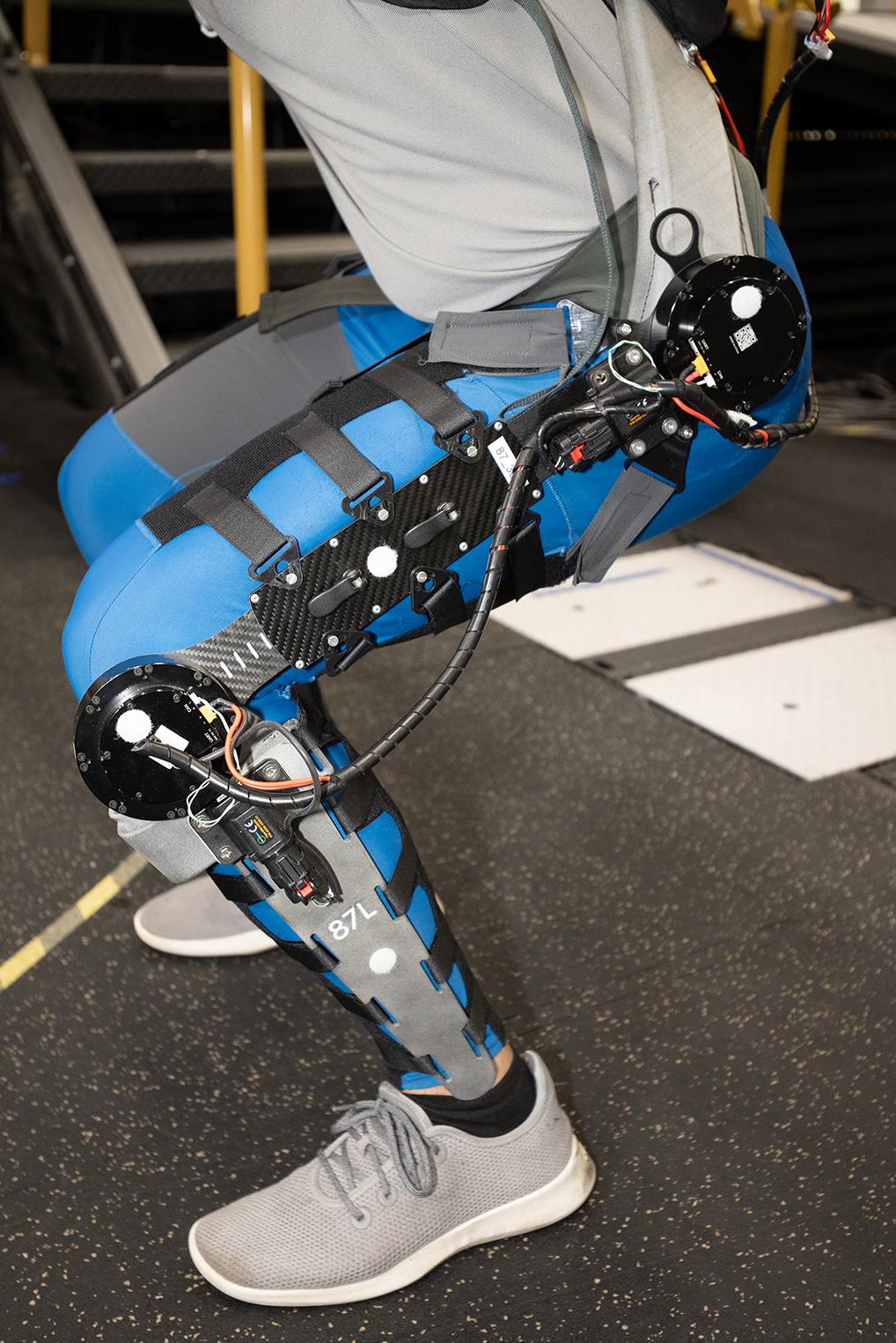

Paired with a slimmed-down exoskeleton that was designed by X, “The Moonshot Factory,” and integrated into a pair of athletic pants, the system requires no calibration or training. Users can put on the device, activate the controller, and go.

The study was led by researchers in the George W. Woodruff School of Mechanical Engineering (ME) and the Georgia Tech Institute for Robotics and Intelligent Machines.

Their system takes a first big step toward devices that could help people navigate the real world, not just the controlled environment of a lab. That could mean helping airline baggage handlers move hundreds of suitcases or factory workers with heavy, labor-intensive tasks. It could also mean improving mobility for older adults or stroke patients who can’t get around as well as they used to.

“The idea is to provide real human augmentation across the high diversity of tasks that people do in their everyday lives, and that could be for clinical applications, industrial applications, recreation, or the military,” said Aaron Young, ME associate professor and the senior researcher on a study describing the controller published Nov. 13 in the journal Nature.

What made it possible for the controller to accurately boost hip and knee joint movements was a whole new approach to the data that feeds the machine learning algorithms.

Instead of predicting the task the user is trying to do — say, climbing stairs or lifting a heavy object — the researchers used sensors to instantaneously detect and estimate the human user’s internal joint efforts. That allowed the hip and knee exoskeleton device in the study to provide a 15% to 20% boost to those joints, making it easier for users to do those activities.
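To make that idea concrete, here is a minimal sketch, not the researchers' actual controller. It assumes a joint-effort estimate is already available and turns it into a motor command by taking a fixed fraction of it, with no step that classifies the task. The function name, the torque limit, and the example numbers are hypothetical; only the 15% to 20% assistance range comes from the study as described above.

```python
def assistance_torque(estimated_moment_nm, assist_fraction=0.2, torque_limit_nm=20.0):
    """Scale an estimated joint moment (N*m) into an exoskeleton torque command.

    Hypothetical helper: the estimate would come from a learned model reading
    wearable sensors; here it is simply passed in as a number.
    """
    if not 0.0 <= assist_fraction <= 1.0:
        raise ValueError("assist_fraction must be between 0 and 1")
    command = assist_fraction * estimated_moment_nm
    # Clamp to an assumed actuator limit so the command stays bounded.
    return max(-torque_limit_nm, min(torque_limit_nm, command))


# Illustrative values only: the same rule applies whatever the wearer is doing.
hip_command = assistance_torque(50.0)                          # 20% of the hip estimate
knee_command = assistance_torque(-30.0, assist_fraction=0.15)  # 15% of the knee estimate
capped_command = assistance_torque(500.0)                      # limited by the torque cap
```

Because the command depends only on the estimated joint effort, the same rule covers stairs, lifting, or a movement the system has never encountered, which is the sense in which such a controller is task-agnostic.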

“What's so cool about the system is, you put it on and now it's part of you. It's adapting to you. It's moving with you. There's no dependency on exactly what you're doing for the exoskeleton,” said Dean Molinaro, a lead author of the Nature study and a former robotics Ph.D. student. “This was kind of a big swing to say, we're willing to not only take a different crack at how we approach exoskeleton control, but also to bank on machine learning as that translator. All the pieces that had to align to realize this type of task-agnostic control felt like a bunch of tiny miracles.”

The research team included, left to right, Keaton Scherpereel, Associate Professor Aaron Young, Dean Molinaro, and Ethan Schonhaut.

The controller described in Nature builds on previous work where the team created a unified exoskeleton controller that could support walking, standing, and climbing stairs or ramps without user intervention.

Their new controller expands far beyond those activities and was able to offer seamless assistance across the range of natural human movement, including the transient and sometimes halting motions common in daily life. It also generalized, meaning it worked for activities that weren’t part of the data used to train the algorithms.

The team collected data on 28 different tasks. They included climbing stairs and ramps, running, walking backwards, jumping up or across, squatting, lifting and placing a weight, turning and twisting, tug-of-war, and calisthenics.

Even with the added weight of the exoskeleton, users expended less energy, and their joints didn’t have to work as hard on most of the tasks the researchers tested. On the few activities with no measurable benefit from the exo, users still experienced significant compensation for the extra weight of wearing the device.

Most work on exoskeleton devices has focused on a single joint. Devices for both the hip and knee typically are slow and show little metabolic benefit. Using biological data from the joints as the key driver overcomes those limitations.

“Coordinating two joints, and being able to augment both simultaneously, is something engineers have struggled with because of the codependency on the joints,” Young said. “That's a beautiful part of our strategy that relies on internal human states: you can then coordinate the two joints naturally and rather effortlessly. That's huge from an engineering standpoint.”

The exoskeleton involved in the group’s trials also is new. Instead of a bulky, robotic device worn on top of a user’s clothes, the device was integrated into a pair of athletic pants. It was designed by X, "The Moonshot Factory," an initiative formerly known as Google X that aims to invent radical technologies to solve big problems. X helped fund the research and worked with Young’s team to create the “clothing-integrated” exoskeleton.

Work on the “exo-pants” idea continues at Skip, a company spun out of X, where the study’s other lead author, Keaton Scherpereel, is now an exoskeleton control engineer. The idea is to move this kind of robotic assistance, in terms of both function and ease of use, toward the real world. Slipping on a pair of pants that can support the whole range of daily movement would be far more practical than today’s bulky devices worn over clothing.

Next steps include finding ways to incorporate other physiological data that could further improve the controller and adapting the controller for different devices.

“We’ve proved this approach works really well, but it requires a lot of data,” said Scherpereel, also a former robotics Ph.D. student. “What happens when we have a different exoskeleton, change the design of the exoskeleton, or move sensors? We want to handle those changes in a way that allows us to take advantage of the data we’ve already collected.”

Along with Molinaro, Scherpereel, and Young, the research team included Ph.D. student Ethan Schonhaut, Georgios Evangelopoulos at Google, and Max Shepherd at Northeastern University.

“This is the floor of how good we can do and still the very early phases of these kinds of controllers,” Young said. “Now that we have these amazing deep learning algorithms and this fundamental idea of using the internal human state, the capabilities are pretty endless for where this technology can go.”

The prototype robotic exoskeleton used in the research was integrated into a pair of athletic pants. X, The Moonshot Factory, worked with Young’s team to create the “clothing-integrated” exoskeleton and helped fund the team's AI controller research.